This post is part 1 in a 3-part series. Please check out part 2 and part 3 as well.

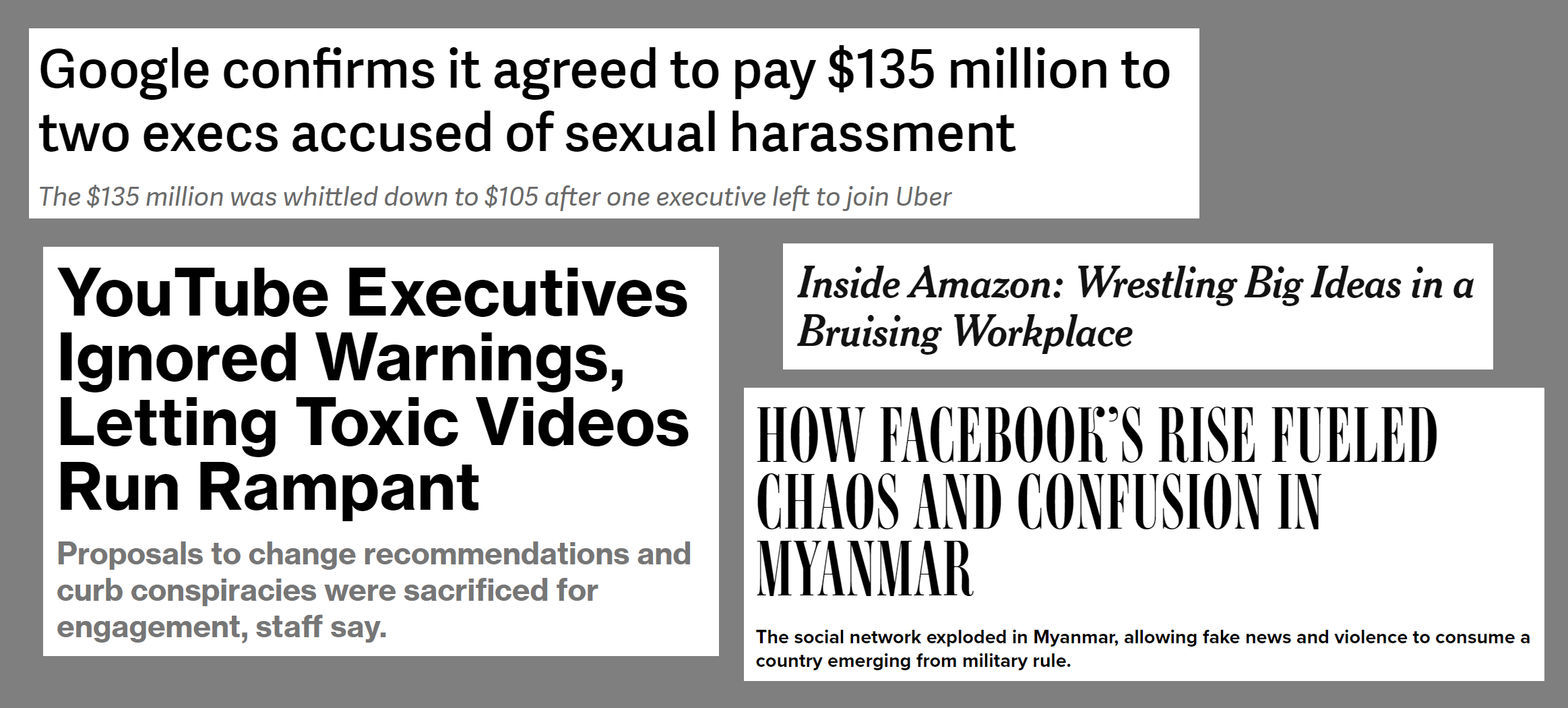

Even before Google announced the creation of an AI ethics committee that included the anti-trans, anti-LGBTQ, and anti-immigrant president of the Heritage Foundation (the committee was canceled a week later), we already knew where Google leadership stood on ethics. These are the same people who paid off executives accused of sexual harassment with tens of millions of dollars (and sent victims away with nothing); tolerated employees leaking the names and photos of their LGBT+ coworkers to white supremacist sites (according to a prominent engineer who spent 11 years at Google); and allowed a top executive to make misleading statements in the New York Times about a vital issue that is destroying lives. Long before Amazon started smearing the MIT researchers who identified bias in Amazon’s facial recognition technology, multiple women at Amazon headquarters were given low performance reviews or put on performance improvement plans (a precursor to being fired) directly after cancer treatments, major surgeries, or having a stillbirth. The topic of Facebook and ethics could (and does) fill a post of its own, but one key point is that Facebook executives were warned in 2013, 2014, and 2015 that their platform was being used to incite ethnic violence in Myanmar, yet failed to take significant action until the UN ruled in 2018 that Facebook had already played a “determining role” in the Myanmar genocide. Since the leadership of top tech companies doesn’t seem concerned with “regular” ethics, of course they won’t be able to embody AI ethics either.

AI ethics is not separate from other ethics, siloed off into its own much sexier space. Ethics is ethics, and even AI ethics is ultimately about how we treat others and how we protect human rights, particularly those of the most vulnerable. The people who created our current crises will not be the ones to solve them, and it is up to all of us to act. In this series, I want to share a few actions you can take to have a practical, positive impact, and to highlight some real-world examples. Some are big; some are small; not all of them will be relevant to your situation, but I hope this series will inspire you with concrete ways you can make a difference. Each post in this series will cover 5 or 6 different action steps.

- Checklist for data projects

- Conduct ethical risk sweeps

- Resist the tyranny of metrics

- Choose a revenue model other than advertising

- Have product & engineering sit with trust & safety

- Advocate within your company

- Organize with other employees

- Leave your company when internal advocacy no longer works

- Avoid non-disparagement agreements

- Support thoughtful regulations & legislation

- Speak with reporters

- Decide in advance what your personal values and limits are

- Ask your company to sign the SafeFace Pledge

- Increase diversity by ensuring that employees from underrepresented groups are set up for success

- Increase diversity by overhauling your interviewing and onboarding processes

- Share your success stories!

A Checklist for Data Projects

Ethics and Data Science (available for free online) argues that just as checklists have helped doctors make fewer errors, they can also help those working in tech make fewer ethical mistakes. The authors propose a checklist for people who are working on data projects. Here are items to include on the checklist:

- Have we listed how this technology can be attacked or abused?

- Have we tested our training data to ensure that it is fair and representative?

- Have we studied and understood possible sources of bias in our data?

- Does our team reflect diversity of opinions, backgrounds, and kinds of thought?

- What kinds of user consent do we need to collect or use the data?

- Do we have a mechanism for gathering consent from users?

- Have we explained clearly what users are consenting to?

- Do we have a mechanism for redress if people are harmed by the results?

- Can we shut down this software in production if it is behaving badly?

- Have we tested for fairness with respect to different user groups?

- Have we tested for disparate error rates among different user groups?

- Do we test and monitor for model drift to ensure our software remains fair over time?
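
To make the “disparate error rates” item concrete, here is a minimal sketch, assuming a binary classifier (label 1 is the positive class) and a single group attribute per example. It computes the false positive and false negative rate for each group and the largest gap between groups. The function names `error_rates_by_group` and `max_gap` and the toy data are hypothetical, written for illustration only; they are not taken from Ethics and Data Science.

```python
import math
from collections import defaultdict


def error_rates_by_group(y_true, y_pred, groups):
    """Compute false positive and false negative rates for each group.

    Assumes binary labels where 1 is the positive class. Returns
    {group: {"fpr": float, "fnr": float}}; a rate is NaN when the group
    has no examples of the relevant class.
    """
    counts = defaultdict(lambda: {"fp": 0, "fn": 0, "pos": 0, "neg": 0})
    for truth, pred, group in zip(y_true, y_pred, groups):
        c = counts[group]
        if truth == 1:
            c["pos"] += 1
            if pred == 0:
                c["fn"] += 1  # missed a true positive
        else:
            c["neg"] += 1
            if pred == 1:
                c["fp"] += 1  # flagged a true negative
    nan = float("nan")
    return {
        group: {
            "fpr": c["fp"] / c["neg"] if c["neg"] else nan,
            "fnr": c["fn"] / c["pos"] if c["pos"] else nan,
        }
        for group, c in counts.items()
    }


def max_gap(rates, metric):
    """Largest difference in `metric` between any two groups (NaNs skipped)."""
    values = [r[metric] for r in rates.values() if not math.isnan(r[metric])]
    return max(values) - min(values) if values else 0.0


# Hypothetical toy data: two groups, four examples each.
y_true = [1, 0, 1, 0, 1, 0, 1, 0]
y_pred = [1, 0, 1, 1, 0, 1, 0, 0]
groups = ["A", "A", "A", "A", "B", "B", "B", "B"]

rates = error_rates_by_group(y_true, y_pred, groups)
print(rates)
print(f"FPR gap: {max_gap(rates, 'fpr'):.2f}, FNR gap: {max_gap(rates, 'fnr'):.2f}")
```

In this made-up data both groups have the same false positive rate, but every true positive in group B is missed (a false negative rate of 1.0 versus 0.0 for group A), a gap that a single aggregate metric would not reveal. Rerunning the same check on fresh production data at regular intervals is one simple way to approach the model-drift item as well.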

Conduct Ethical Risk Sweeps

Even when we have good intentions, our systems can be manipulated, exploited, or otherwise fail, leading to widespread harm. Consider the role Facebook played in the Myanmar genocide, or how conspiracy theories spread via recommendation systems led to huge decreases in vaccination rates and, in turn, rising death rates from preventable diseases (Nature has referred to viral misinformation as “the biggest pandemic risk”).

Just as penetration testing is standard practice in information security, we need to proactively search for potential failures, manipulation, and exploitation of our systems before they occur. As I wrote in a previous post, we need to ask:

- How could trolls use your service to harass vulnerable people?

- How could an authoritarian government use your work for surveillance? (Mass surveillance and data collection have played key roles in multiple humanitarian crises and genocides.)

- How could your work be used to spread harmful misinformation or propaganda?

- What safeguards could be put in place to mitigate the above?

Ethicists at the Markkula Center have developed an ethical toolkit for engineering design & practice. I encourage you to read all of it, but I particularly want to highlight tool 1, Ethical Risk Sweeps, which turns the questions above into a formal process, including regularly scheduled ethical risk sweeps and rewards for team members who spot new ethical risks.

Resist the Tyranny of Metrics

Metrics are just a proxy for what you really care about, and unthinkingly optimizing a metric can lead to unexpected, negative results. For instance, YouTube’s recommendation system was built to optimize watch time, which leads it to aggressively promote videos saying the rest of the media is lying (since believing conspiracy theories causes people to avoid other media sources and maximize the time they spend on YouTube). This phenomenon has been well documented by journalists, academic experts, and former YouTube employees.

Fortunately, we don’t have to unthinkingly optimize metrics! Chapter 4 of Technically Wrong documents how, in response to widespread racial profiling on its platform, Nextdoor made a set of comprehensive changes. Nextdoor overhauled the design of how users report suspicious activity, including a new requirement that users describe several features of a person’s appearance other than race before they are allowed to post. These changes had the side effect of reducing engagement, but not all user engagement is good.

Evan Estola, lead machine learning engineer at Meetup, discussed the example of men expressing more interest than women in tech meetups. Meetup’s algorithm could recommend fewer tech meetups to women, and as a result, fewer women would find out about and attend tech meetups, which could cause the algorithm to suggest even fewer tech meetups to women, and so on in a self-reinforcing feedback loop. Evan and his team made the ethical decision for their recommendation algorithm to not create such a feedback loop.
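
The feedback loop Estola describes can be illustrated with a toy simulation. Everything in it is an assumption for illustration: the interest values are made up, and the “group-aware” update rule is a hypothetical naive recommender, not Meetup’s actual algorithm. Each round, attendance depends on how often a group is shown tech meetups, and the naive rule then sets next round’s recommendation rate from that attendance, so the output feeds back into the input.

```python
def simulate(interest, rounds, group_aware=True):
    """Toy model of a recommendation feedback loop (hypothetical).

    interest: made-up probability that a member of each group attends a
    recommended tech meetup. This never changes during the simulation.
    Returns the per-group recommendation rate after each round.
    """
    rec_rate = {g: 1.0 for g in interest}  # start: everyone is shown tech meetups
    history = []
    for _ in range(rounds):
        # Observed attendance depends on how often each group was shown meetups.
        attendance = {g: rec_rate[g] * interest[g] for g in interest}
        if group_aware:
            # Naive policy: recommend to each group in proportion to its observed
            # attendance, relative to the most-engaged group.
            top = max(attendance.values())
            rec_rate = {g: attendance[g] / top for g in interest}
        # Group-blind policy: rec_rate is left unchanged, so attendance
        # differences never feed back into who is shown tech meetups.
        history.append(dict(rec_rate))
    return history


interest = {"men": 0.6, "women": 0.4}  # hypothetical values, not real data
naive = simulate(interest, rounds=5)
blind = simulate(interest, rounds=5, group_aware=False)

for i, (n, b) in enumerate(zip(naive, blind), start=1):
    print(f"round {i}: naive women={n['women']:.3f}  group-blind women={b['women']:.3f}")
```

Under these made-up numbers, the naive policy shrinks the rate at which women are shown tech meetups by a factor of 2/3 every round, even though their underlying interest never changes; the group-blind variant keeps it constant.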

Choose a Revenue Model Other Than Advertising

There is a fundamental misalignment between personalized ad-targeting and the well-being of society. This shows up in myriad ways:

- Incentivizing surveillance by aligning increased data with increased profits

- Incentivizing incendiary content, since that generates more clicks

- Incentivizing conspiracy theories, which keep people on your platform longer

- Manipulating elections

- Racial discrimination in housing ads

- Age discrimination in job ads

These issues could and should be addressed in many ways, including stricter regulations and enforcement around political ads, housing discrimination, and employment discrimination; revising Section 230 to cover only content and not recommendations; and taxing personalized ad-targeting the way we tax tobacco (as something that externalizes huge costs to society).

Companies such as Google/YouTube, Facebook, and Twitter primarily rely on advertising revenue, which creates perverse incentives. Even when people genuinely want to do the right thing, it is tough to constantly be struggling against misaligned incentives. It is like filling your kitchen with donuts just as you are embarking on a diet. One way to head this off is to seek out revenue models other than advertising. Build a product that people will pay for. There are many companies out there with other business models, such as Medium, Eventbrite, Patreon, GitHub, Atlassian, Basecamp, and others.

Personalized ad targeting is so entrenched in online platforms that the idea of moving away from it can feel daunting. However, Tim Berners-Lee, inventor of the World Wide Web, wrote last year: “Two myths currently limit our collective imagination: the myth that advertising is the only possible business model for online companies, and the myth that it’s too late to change the way platforms operate. On both points, we need to be a little more creative.”

Have Product & Engineering Sit with Trust & Safety

Medium’s head of legal, Alex Feerst, wrote a post in which he interviewed 15 people who work on Trust & Safety (which includes content moderation) at a variety of major tech companies, roles that Feerst describes as simultaneously “the judges and janitors of the internet.” As one Trust & Safety (T&S) employee observed, “Creators and product people want to live in optimism, in an idealized vision of how people will use the product, not the ways that people will predictably break it… The separation of product people and trust people worries me, because in a world where product managers and engineers and visionaries cared about this stuff, it would be baked into how things get built. If things stay this way—that product and engineering are Mozart and everyone else is Alfred the butler—the big stuff is not going to change.”

Having product and engineering staff shadow, or even sit with, T&S agents (including content moderators) is one way to address this. It makes the ways tech platforms are misused more apparent to the people who build them. A T&S employee at another company describes this approach: “We have executives and product managers shadow trust and safety agents during calls with users. Sitting with an agent talking to a sexual assault victim helps build some empathy, so when they go back to their teams, it’s running in the back of their brain when they’re thinking about building things.”

To be continued…

This post is the first in a series. Please check out part 2 and part 3 for further steps you can take to improve the tech industry. The problems we are facing can be overwhelming, so choosing a single item from this list may be a good way to start. I encourage you to choose one thing you will do, and to make a commitment now.