Today we are releasing a free, online course on Applied Data Ethics, which contains essential knowledge for anyone working in data science or impacted by technology. The course focuses on urgent, practical topics that are causing real harm right now. In keeping with the fast.ai teaching philosophy, we will begin with two active, real-world areas (disinformation and bias) to provide context and motivation, before stepping back in Lesson 3 to dig into foundations of data ethics and practical tools. From there we will move on to additional subject areas: privacy & surveillance, the role of the Silicon Valley ecosystem (including metrics, venture growth, & hypergrowth), and algorithmic colonialism.

If you are ready to get started now, check out the syllabus and reading list or watch the videos here. Otherwise, read on for more details!

There are no prerequisites for the course. It is not intended to be exhaustive, but will hopefully provide useful context about how data misuse is impacting society, as well as practice in critical thinking skills and questions to ask. This class was originally taught in person at the University of San Francisco Data Institute in January-February 2020, as an evening certificate course for a diverse mix of working professionals from a range of backgrounds.

About Data Ethics Syllabi

Data ethics covers an incredibly broad range of topics, many of which are urgent, making headlines daily, and causing harm to real people right now. A meta-analysis of over 100 syllabi on tech ethics, titled “What do we teach when we teach tech ethics?”, found that there was huge variation in which topics are covered across tech ethics courses (law & policy, privacy & surveillance, philosophy, justice & human rights, environmental impact, civic responsibility, robots, disinformation, work & labor, design, cybersecurity, research ethics, and more, far more than any one course could cover). These courses were taught by professors from a variety of fields. The area where there was more unity was in outcomes, with the abilities to critique, spot issues, and make arguments being some of the most common desired outcomes for tech ethics courses.

There is a ton of great research and writing on the topics covered in the course, and it was very tough for me to cut the reading list down to a “reasonable” length. There are many more fantastic articles, papers, essays, and books on these topics that are not included here. Check out my syllabus and reading list here.

A note about the fastai video browser

There is an icon near the top left of the video browser that opens up a menu of all the lessons. An icon near the top right opens up the course notes and a transcript search feature.

Topics covered

Lesson 1: Disinformation

From deepfakes being used to harass women and widespread misinformation about coronavirus (labeled an “infodemic” by the WHO) to fears about the role disinformation could play in the 2020 election and news of extensive foreign influence operations, disinformation is in the news frequently and is an urgent issue. It is also indicative of the complexity and interdisciplinary nature of so many data ethics issues: disinformation involves tech design choices, bad actors, human psychology, misaligned financial incentives, and more.

Watch the Lesson 1 video here.

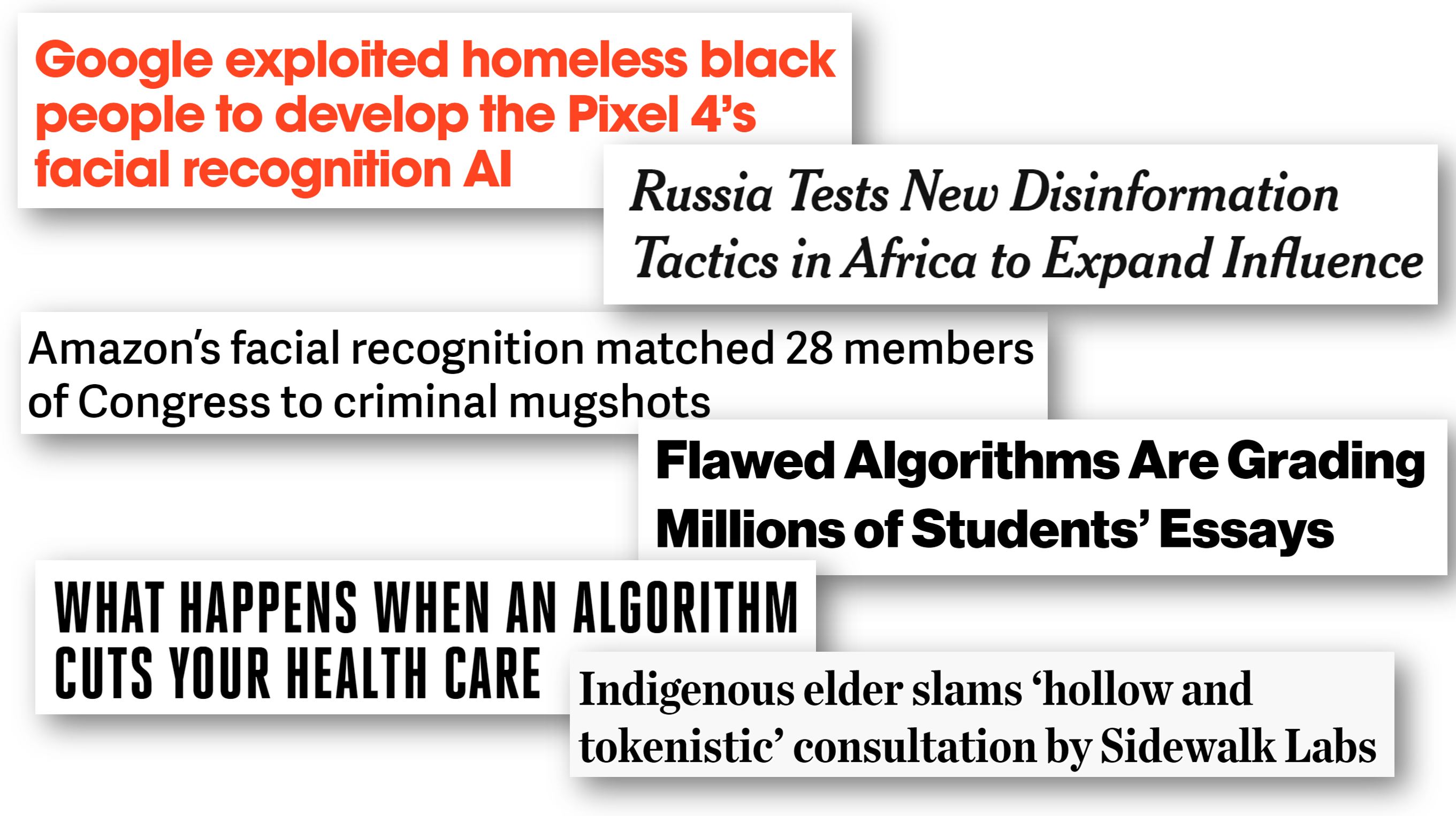

Lesson 2: Bias & Fairness

Unjust bias is an increasingly discussed issue in machine learning and has even spawned its own field, Fairness, Accountability, and Transparency (FAccT), of which it is the primary focus. We will go beyond a surface-level discussion and cover questions of how fairness is defined, different types of bias, steps towards mitigating it, and complicating factors.

Watch the Lesson 2 video here.

Lesson 3: Ethical Foundations & Practical Tools

Now that we’ve seen a number of concrete, real-world examples of ethical issues that arise with data, we will step back and learn about some ethical philosophies and lenses through which to evaluate ethics, and consider how ethical questions are chosen. We will also cover the Markkula Center’s Tech Ethics Toolkit, a set of concrete practices to be implemented in the workplace.

Watch the Lesson 3 video here.

Lesson 4: Privacy & Surveillance

Huge amounts of data are being collected about us: apps on our phones track our location, dating sites sell intimate details, facial recognition in schools records students, and police use large, unregulated databases of faces. Here, we discuss real-world examples of how our data is collected, sold, and used. There are also concerning patterns of how surveillance is used to suppress dissent and to further harm those who are already marginalized.

Watch the Lesson 4 video here.

Lesson 5: How did we get here? Our Ecosystem

News stories understandably often focus on one instance of a particular ethics issue at a particular company. Here, I want us to step back and consider some of the broader trends and factors that have resulted in the types of issues we are seeing. These include our over-emphasis on metrics, the inherent design of many of the platforms, venture capital’s focus on hypergrowth, and more.

Watch the Lesson 5 video here.

Lesson 6: Algorithmic Colonialism, and Next Steps

When corporations from one country develop and deploy technology in many other countries, extracting data and profits, often with little awareness of local cultural issues, a number of ethical issues can arise. Here we will explore algorithmic colonialism. We will also consider next steps for how students can continue to engage around data ethics and take what they’ve learned back to their workplaces.

Watch the Lesson 6 video here.

For the Applied Data Ethics course, you can find the homepage here, read the syllabus and reading list, and watch the videos here.