Summary: today we’re announcing fastchan, a new conda mini-distribution with a focus on the PyTorch ecosystem. Using fastchan, installation and updates of libraries such as PyTorch and RAPIDS are faster, easier, and more reliable.

This detailed blog post by the brilliant Aman Arora of Weights and Biases provides a great overview of what fastchan is for, how it relates to other parts of the ecosystem, and how it makes life easier in practice.

What you need to know

If you use Anaconda, you can now install Python software like fastai, RAPIDS, timm, OpenCV, and Hugging Face Transformers with a single unified command: conda install -c fastchan. The same approach can also be used to upgrade any software you’ve installed from fastchan. Software on fastchan has been tested to install successfully on Mac, Linux, and Windows, on all recent versions of Python. A full list of packages is available here.

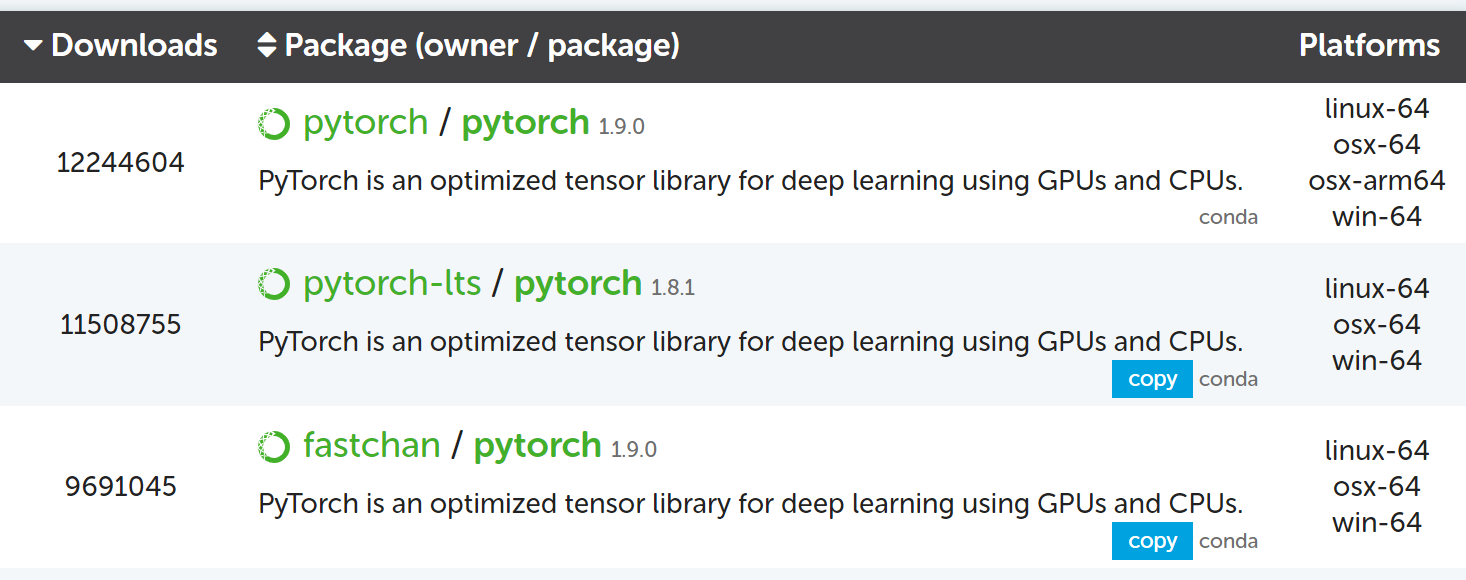

We’ve been testing fastchan for the last few months, and have switched the official installation source for fastai to use fastchan. According to Anaconda, it’s already nearly as popular as the PyTorch channel itself!

Background

conda is one of my favorite pieces of software. It allows me to install a huge range of software, such as Rust, GCC, CUDA Toolkit, Python, graphviz, and thousands more, without even requiring root access. It installs executables, C libraries, Python modules, and just about anything else you can think of. It also handles installing any needed dependencies automatically.

If you’ve used a Linux package manager like apt (Ubuntu) or yum (Fedora) then that will sound pretty familiar – conda is, basically, just another package manager. But rather than being used to install system packages that are used as part of the operating system, it’s used for installing your own personal software. That means that you can’t accidentally break your OS if you use conda.

It can also create separate self-contained environments with totally isolated software installations. That means I can create a quick throwaway environment to test some new software, without breaking my base environment, or even keep separate environments for different projects, which might require (for instance) different versions of Python or libraries.

Some people conflate conda with pip and virtualenv. However, these are just used for managing Python packages. They cannot manage executables, C libraries, and so forth.

conda installs software from channels, which are repositories of installation packages. The most widely used channels are defaults, which is used automatically by Anaconda, the most popular conda system installer, and conda-forge, a community repository to which thousands of developers have contributed conda packages.

Many organizations maintain their own channels, such as fastai, nvidia, and pytorch.

The distribution problem

You have likely heard the term distribution, as used in the Linux world. Distributions such as Ubuntu and Fedora provide scheduled releases of sets of software packages which have been tested to work correctly together. They also provide repositories where new versions of software are made available, after testing the software to ensure it works correctly as part of that distribution.

Anaconda and the defaults channel are also a distribution. On a regular basis, Anaconda release a new version of their main installer, along with a set of packages that have been tested to work correctly together. Furthermore, they continue to test new versions of packages between software releases, adding them to the defaults channel when they are ready.

Many of the packages in the defaults channel are sourced from conda-forge. conda-forge is not a distribution itself, but a repository for any user to upload “recipes” to build software, and to make available the results of those builds. Anaconda takes a subset of these packages, along with software they package themselves, and software packaged by other partners, does additional integration testing of the combination of these packages, and then makes them available in their distribution.

This system works very well in many situations. Those needing cutting-edge versions of software or packages not available in the defaults channel can install them directly from conda-forge, and those needing the convenience and confidence of a distribution can just install software from defaults.

However, many Python libraries are not in the defaults or conda-forge channels, or are not available in any channel at all. Furthermore, conda-forge is now so large (thanks to its great success!) that I’ve had to wait over two days for conda to figure out the dependencies when trying to install software that uses conda-forge. (There is a much faster conda replacement called mamba, but it is not yet feature complete, and cannot currently install PyTorch correctly.)

Libraries which use the GPU are a particular issue, since conda-forge does not yet have facilities for building and testing GPU-enabled software. Furthermore, GPU libraries are particularly difficult to package correctly, needing to work with many combinations of versions of CUDA, cuDNN, Python, and OS. There is a large ecosystem of software that depends on PyTorch, and the PyTorch team has set up a large number of integration tests that are run before each release. PyTorch also has its own custom framework for building the software, resulting in packages that automatically identify the correct installer for each user.

These issues result in complex commands to install packages in this ecosystem, such as this command that’s currently required for installing NVIDIA’s powerful RAPIDS software:

conda create -n rapids-21.06 -c rapidsai -c nvidia -c conda-forge \
    rapids-blazing=21.06 python=3.7 cudatoolkit=11.0

As Aman Arora eloquently explains, running this command creates a new environment that doesn’t include any of the other software that we’ve previously installed, and provides no mechanism for keeping the software up to date. Adding other packages to the environment becomes complex, since version mismatches are very common when combining multiple channels in this way.

I wanted to create packages that depend on RAPIDS, but there’s no real way to make it easy for users to install and update such a package.

I hear a lot of developers telling data scientists that they should create new docker or conda environments for every separate project, and that that’s the correct way to avoid these problems. However, that’s like telling people to install a new operating system for each application they want to use. Imagine if you couldn’t run Chrome and vscode at the same time, but had to switch to a new environment for each! We need to be able to install all the software and libraries we need to do our work, in the same place, at the same time. We need to be able to use them together, and maintain them.

The solution: a new distribution

To avoid these problems we created a new channel and distribution called fastchan. fastchan contains all the dependencies needed to install fastai, PyTorch, RAPIDS, and much more. We use the official PyTorch build of PyTorch, the official NVIDIA build of RAPIDS and CUDA Toolkit, and so forth. The developers of these packages have spent considerable time packaging their software in a way that works best, so we think it’s best to use their work, instead of starting from scratch.

For libraries and dependencies that are only available on conda-forge, we copy those into the fastchan channel. We use a little-known, but very useful, Anaconda command called copy that copies packages across channels.

fastchan uses conda’s own dependency solver to figure out recursively all the dependencies that are needed. We only include dependencies that are not already available in the defaults channel. That’s because defaults is already used by default in Anaconda, so there is no need for us to duplicate what’s already there.
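To make the idea concrete, here is a minimal sketch of "collect every dependency recursively, then drop whatever defaults already provides". It is not how fastchan is implemented: fastchan delegates this work to conda’s solver, which also handles version constraints. The package names, the dependency graph, and the function names below are all hypothetical and exist only for illustration.

```python
from collections import deque

# Toy dependency graph (hypothetical package names and edges, illustration only).
DEPENDS_ON = {
    "app": ["libfoo", "python"],
    "libfoo": ["libbar", "numpy"],
    "libbar": [],
    "numpy": ["python"],
    "python": [],
}

# Packages assumed (for this example) to already be available in defaults.
IN_DEFAULTS = {"python", "numpy"}


def transitive_dependencies(root, graph):
    """Return every package reachable from `root`, including `root` itself."""
    seen = {root}
    queue = deque([root])
    while queue:
        package = queue.popleft()
        for dep in graph.get(package, []):
            if dep not in seen:
                seen.add(dep)
                queue.append(dep)
    return seen


def packages_to_copy(root, graph, in_defaults):
    """Keep only the packages that defaults does not already provide."""
    return sorted(transitive_dependencies(root, graph) - set(in_defaults))


if __name__ == "__main__":
    print(packages_to_copy("app", DEPENDS_ON, IN_DEFAULTS))
```

In this toy graph, python and numpy are filtered out because they are assumed to be in defaults, so only the remaining packages would need to live in the extra channel.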

In addition, we package some software that’s currently only available as pip packages on pypi. We use a couple of methods to do this. One is using the terrific setuptools-conda software by fellow Aussie Chris Billington. The other is a new build.py program we wrote, which is used for compiled software such as sentencepiece and OpenCV.

The packages are built and copied automatically twice every day thanks to these GitHub Actions workflows.

The result

The result of all this is that you can rely on just the defaults and fastchan channels to install nearly everything you need, especially if you’re working with software in the PyTorch and Hugging Face ecosystems. Even more important than installation is updates – you can now update all your packages at once with a single command! To have this all handled automatically, create a file ~/.condarc containing the following:

channels:
  - fastchan
  - defaults

Then you can just use conda install and conda upgrade without needing to even pass -c fastchan. If you run conda upgrade --all your entire environment will be brought fully up to date (similar to using sudo apt upgrade to keep an Ubuntu installation up to date).
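The order of the channels list in ~/.condarc matters: channels listed earlier have higher priority. The sketch below is a deliberately simplified model of that idea, in which the first channel in the list that carries a package wins. Real conda is more involved: its solver also weighs versions and dependencies, and the exact behaviour depends on the channel_priority setting. The channel contents and the function name here are hypothetical.

```python
# Hypothetical channel contents, for illustration only.
CHANNEL_CONTENTS = {
    "fastchan": {"fastai", "pytorch", "timm"},
    "defaults": {"python", "numpy", "pytorch"},
}

# Same order as the `channels:` list in ~/.condarc: earlier means higher priority.
CHANNEL_ORDER = ["fastchan", "defaults"]


def pick_channel(package, channel_order, contents):
    """Return the first channel in priority order that carries `package`, or None."""
    for channel in channel_order:
        if package in contents.get(channel, set()):
            return channel
    return None


if __name__ == "__main__":
    for name in ["pytorch", "numpy", "not-a-real-package"]:
        print(name, "->", pick_channel(name, CHANNEL_ORDER, CHANNEL_CONTENTS))
```

In this model a package present in both channels is taken from fastchan, while anything fastchan does not carry falls through to defaults, which is why no -c flag is needed once the file is in place.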

Future work

I hope that fastchan will be a useful starting point for folks thinking about Python packaging and deployment. There’s a lot more that could be added to make it better. For instance, currently the only guarantee made by fastchan is that the packages provided can be installed correctly together. It doesn’t actually check that they work correctly. Ideally, integration tests would be run on both CPU and GPU to ensure that code that uses the libraries together gives the expected results.

It would also be great if there were a community-driven way for anyone to request packages get added to fastchan, and also to add their own integration tests. Integration tests are particularly important to ensure that no-one adds or changes a package which causes breakage on dependent packages (or at least to ensure that broken downstream packages are clearly marked as such).

fast.ai, with the mission of making deep learning more accessible, isn’t an obvious home for a conda distribution. We created fastchan because we needed it for ourselves and our users. Hopefully in the future the key players such as PyTorch, NVIDIA, Anaconda, and conda-forge will solve the distribution problem together, and make fastchan obsolete!