Three years ago we pioneered Deep Learning from the Foundations, an in-depth course that started right from the foundations (implementing and GPU-optimising matrix multiplications and initialisations) and covered from-scratch implementations of all the key applications of the fastai library.

This year, we’re going “from the foundations” again, but this time we’re going further. Much further! This time, we’re going all the way through to implementing the astounding Stable Diffusion algorithm. That’s the killer app that made the internet freak out, and caused the media to say “you may never believe what you see online again”.

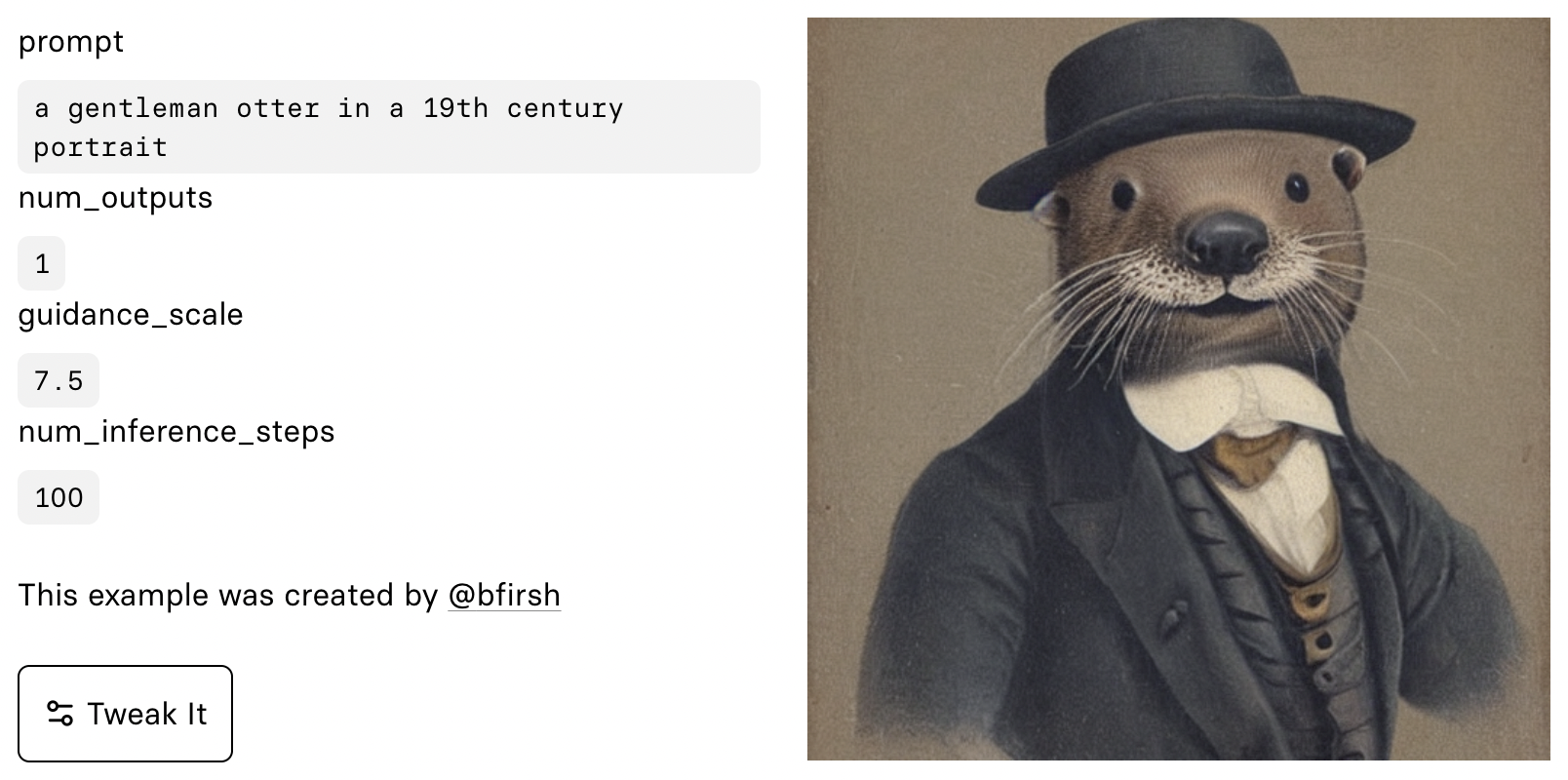

Stable Diffusion, and diffusion methods in general, are a great learning goal for many reasons. For one thing, of course, you can create amazing stuff with these algorithms! To take the technique to the next level and create things no-one has seen before, you need to deeply understand what’s happening under the hood. With this understanding, you can craft your own loss functions, initialization methods, multi-model mixups, and more, to build entirely new applications.

Just as important: it’s a great learning goal because nearly every key technique in modern deep learning comes together in these methods. Contrastive learning, transformer models, auto-encoders, CLIP embeddings, latent variables, U-Nets, ResNets, and much more are involved in creating a single image.

This is all cutting-edge stuff, so to ensure we bring the latest techniques to you, we’re teaming up with the folks who brought Stable Diffusion to the world: stability.ai. In many ways, stability.ai are kindred spirits to fast.ai. Like us, they are a self-funded research lab, and like us, their focus is smashing down any gates that make cutting-edge AI less accessible. So it makes sense for us to team up on this audacious goal of teaching Stable Diffusion from the foundations.

The course will be available for free online from early 2023. But if you want to join the course as it’s being made, along with hundreds of the world’s leading deep learning practitioners, you can register for the virtual live course through our official academic partner, the University of Queensland (UQ). UQ will open registrations in the next few days, so keep an eye on UQ’s registration page.

During the live course, we’ll be learning to read and implement the latest papers, with lots of opportunities to practice and get feedback. Many past participants in fast.ai’s live courses have described it as a “life-changing” experience… and it’s our sincere hope that this course will be our best ever.

To get the most out of this course, you should be a reasonably confident deep learning practitioner. If you’ve finished fast.ai’s Practical Deep Learning course, then you’ll be ready! If you haven’t done that course but are comfortable building an SGD training loop from scratch in Python, being competitive in Kaggle competitions, applying modern NLP and computer vision algorithms to practical problems, and working with PyTorch and fastai, then you will be ready to start the course. (If you’re not sure, I strongly recommend getting started with Practical Deep Learning now; if you push, you’ll be done before the new course starts!)

If you’re an alumnus of Deep Learning for Coders, you’ll know that the course sets you up to be an effective deep learning practitioner. This new course will take you to the next level: creating novel applications that bring multiple techniques together, and understanding and implementing research papers. Alumni of previous versions of fast.ai’s “part 2” courses have even gone on to publish deep learning papers in top conferences and journals, and to join highly regarded research labs and startups.